Wednesday, September 4. 2013

Keynote: VMware vCHS, Puppet, and Project Zombie

Sunday, September 1. 2013

Get up to speed at VMware WalkThroughs

This VMware product walkthrough website is designed to provide a step-by-step overview of how to configure several features, such as Data Protection, App HA, Replication, and Flash Read Cache, and it also contains a great walkthrough for Virtual SAN and vCloud Director. Here's a list of the current topics:

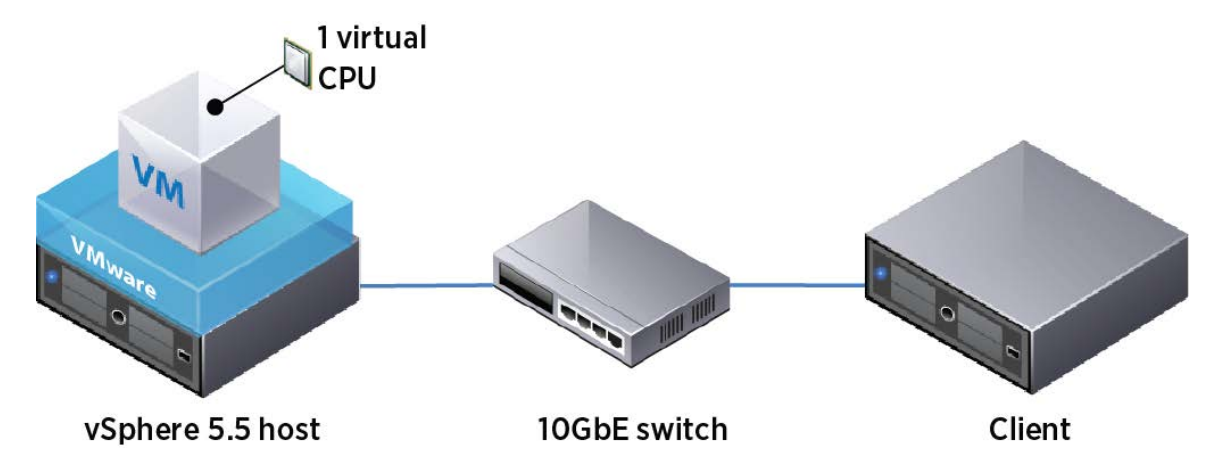

Deploying Extremely Latency-Sensitive Applications in VMware vSphere 5.5

Although virtualization simplifies IT management and saves costs, these benefits come with an inherent overhead from abstracting and sharing physical hardware and resources.

Virtualization overhead can increase processing time and make it more variable. VMware vSphere minimizes this overhead so that it is not noticeable for a wide range of applications, including most business-critical applications such as database systems, web applications, and messaging systems.

vSphere also performs well for applications with millisecond-level latency constraints, such as VoIP streaming applications. However, extremely latency-sensitive applications can still be affected by virtualization overhead because of their strict latency requirements. To support virtual machines with such requirements, vSphere 5.5 introduces a new per-VM feature called Latency Sensitivity.

Among other optimizations, this feature allows virtual machines to exclusively own physical cores, avoiding overhead related to CPU scheduling and contention. When the feature is combined with pass-through functionality, which bypasses the network virtualization layer, applications can achieve near-native performance in both response time and jitter.

The white paper:

- Explains the major sources of added latency in a virtualized environment, which fall into two categories: contention created by sharing resources, and overhead from the extra layers of processing that virtualization introduces.

- Presents details of the Latency Sensitivity feature, which improves both response time and jitter by eliminating the major sources of extra latency that virtualization adds.

- Presents evaluation results showing that the Latency Sensitivity feature, combined with pass-through mechanisms, considerably reduces both median response time and jitter compared to the default configuration, achieving near-native performance.

- Discusses the side effects of using the Latency Sensitivity feature and presents best practices.
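The evaluation compares median response time and jitter across configurations. As a rough illustration of how those two figures can be derived from raw round-trip samples, the following Python sketch computes a median and a jitter value. Defining jitter as the gap between the 99th percentile and the median is an assumption made here for illustration; the white paper may use a different percentile or metric, and the sample values are hypothetical, not results from the paper.

```python
import statistics


def latency_summary(samples_us):
    """Summarize round-trip latency samples (microseconds).

    Jitter is defined here, as an illustrative assumption, as the gap
    between the 99th-percentile latency and the median latency.
    """
    if len(samples_us) < 2:
        raise ValueError("need at least two samples")
    median = statistics.median(samples_us)
    # quantiles(..., n=100) returns the 99 cut points p1..p99; index 98 is p99.
    p99 = statistics.quantiles(samples_us, n=100, method="inclusive")[98]
    return {"median_us": median, "p99_us": p99, "jitter_us": p99 - median}


# Hypothetical samples for two configurations, not data from the white paper.
default_cfg = [40, 42, 41, 45, 43, 40, 120, 44, 41, 300]
tuned_cfg = [20, 21, 20, 22, 21, 20, 23, 21, 20, 24]
print(latency_summary(default_cfg))
print(latency_summary(tuned_cfg))
```

A single median hides occasional long stalls, which is why a high-percentile figure is reported alongside it: the outliers in `default_cfg` barely move its median but dominate its jitter value.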

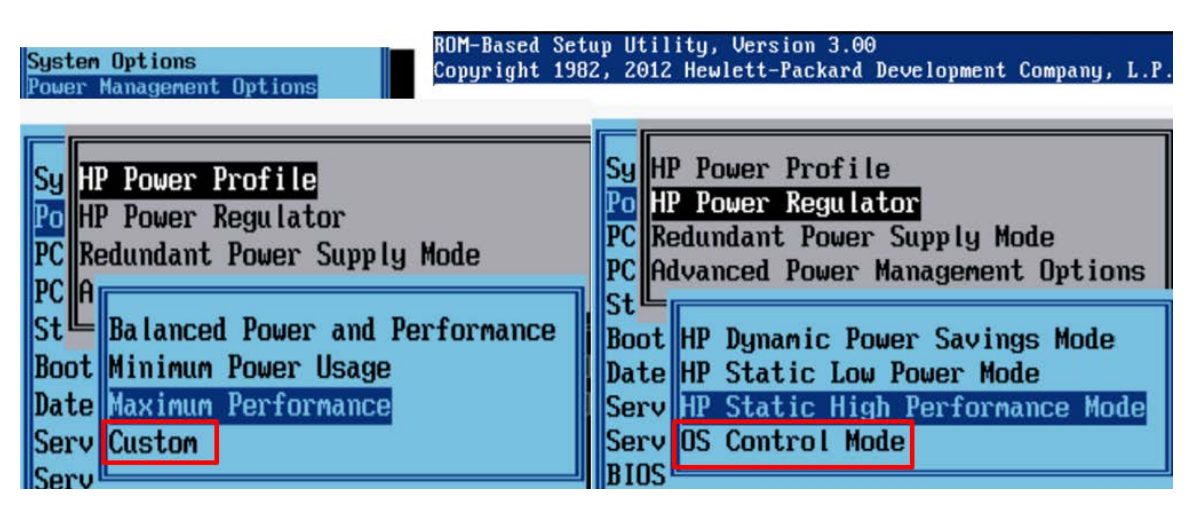

Host Power Management in VMware vSphere 5.5

vSphere Host Power Management (HPM) is a technique that saves energy by placing certain parts of a computer system or device into a reduced power state when the system or device is inactive or does not need to run at maximum speed. Host power management is distinct from vSphere Distributed Power Management (DPM), which redistributes virtual machines among the physical hosts in a cluster so that some hosts can be powered off completely.

Host power management saves energy on hosts that are powered on. It can be used either alone or in combination with DPM. vSphere handles power management by utilizing Advanced Configuration and Power Interface (ACPI) performance and power states. In VMware vSphere 5.0, the default power management policy was based on dynamic voltage and frequency scaling (DVFS).

This technology uses the processor’s performance states and saves some power by running the processor at a lower frequency and voltage. However, beginning in VMware vSphere 5.5, the default HPM policy uses deep halt states (C-states) in addition to DVFS to significantly increase power savings over previous releases while still maintaining good performance.
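To see why running at a lower frequency and voltage saves power, and why deep halt states help on top of that, the sketch below uses the common first-order CMOS model in which dynamic power scales with capacitance times voltage squared times frequency, plus a time-weighted average for a core that is idle part of the time. This is a textbook approximation, not vSphere's HPM algorithm, and the wattages in the usage lines are hypothetical.

```python
def relative_dynamic_power(v_ratio, f_ratio):
    """Dynamic power relative to nominal under the P ~ C * V^2 * f model.

    Assumes the switched capacitance C stays constant, so only the
    voltage and frequency ratios matter.
    """
    if v_ratio <= 0 or f_ratio <= 0:
        raise ValueError("ratios must be positive")
    return v_ratio ** 2 * f_ratio


def average_power(busy_fraction, p_active_w, p_idle_w):
    """Time-weighted average power of a core that is busy part of the time.

    A deeper idle (halt) state is modeled simply as a lower p_idle_w.
    """
    if not 0.0 <= busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be in [0, 1]")
    return busy_fraction * p_active_w + (1.0 - busy_fraction) * p_idle_w


# Hypothetical operating point: 80% of nominal voltage and frequency.
print(relative_dynamic_power(0.8, 0.8))  # 0.8**2 * 0.8, about 0.51 of nominal
# Hypothetical core busy 25% of the time: shallow vs. deeper idle state.
print(average_power(0.25, p_active_w=20.0, p_idle_w=10.0))
print(average_power(0.25, p_active_w=20.0, p_idle_w=4.0))
```

Because voltage enters squared, lowering voltage together with frequency (DVFS) saves more than lowering frequency alone, while deeper C-states cut the power drawn during the idle fraction of time, which DVFS does not address.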

Download White Paper: Host Power Management in VMware vSphere 5.5

Performance of vSphere Flash Read Cache in VMware vSphere 5.5

VMware vSphere 5.5 introduces new functionality to leverage flash storage devices on a VMware ESXi host. The vSphere Flash Infrastructure layer is part of the ESXi storage stack for managing flash storage devices that are locally connected to the server. These devices can be of multiple types (primarily PCIe flash cards and SAS/SATA SSD drives), and the vSphere Flash Infrastructure layer is used to aggregate these flash devices into a unified flash resource.

Adding a flash device to this unified resource is optional, so devices that need to be made available directly to a virtual machine can be left out of it. The flash resource created by the vSphere Flash Infrastructure layer can be used for two purposes: read caching of virtual machine I/O requests (vSphere Flash Read Cache) and storing the host swap file. This paper focuses on the performance benefits and best-practice guidelines when using the flash resource for read caching of virtual machine I/O requests.
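The opt-in aggregation described above can be modeled with a small data structure. In this Python sketch, `FlashDevice`, `build_flash_resource`, and `allocate` are hypothetical names, not ESXi APIs, and the capacities are made up; the sketch only shows that devices left out of the pool stay available for direct virtual machine use and that the pooled capacity is shared between read caching and the host swap file.

```python
from dataclasses import dataclass


@dataclass
class FlashDevice:
    name: str                 # hypothetical device label
    kind: str                 # e.g. "PCIe flash card" or "SAS/SATA SSD"
    capacity_gb: int
    in_flash_resource: bool   # False keeps the device for direct VM use


def build_flash_resource(devices):
    """Pool the capacity of opted-in devices into one flash resource."""
    pooled = [d for d in devices if d.in_flash_resource]
    return {
        "members": [d.name for d in pooled],
        "capacity_gb": sum(d.capacity_gb for d in pooled),
        "direct_use": [d.name for d in devices if not d.in_flash_resource],
    }


def allocate(resource, read_cache_gb, host_swap_gb):
    """Split pooled capacity between read caching and the host swap file."""
    if read_cache_gb < 0 or host_swap_gb < 0:
        raise ValueError("allocations must be non-negative")
    used = read_cache_gb + host_swap_gb
    if used > resource["capacity_gb"]:
        raise ValueError("allocation exceeds pooled flash capacity")
    return {
        "read_cache_gb": read_cache_gb,
        "host_swap_gb": host_swap_gb,
        "free_gb": resource["capacity_gb"] - used,
    }


devices = [
    FlashDevice("pcie0", "PCIe flash card", 400, True),
    FlashDevice("ssd0", "SAS/SATA SSD", 200, True),
    FlashDevice("ssd1", "SAS/SATA SSD", 100, False),  # passed to a VM directly
]
pool = build_flash_resource(devices)
print(pool)  # 600 GB pooled; ssd1 left for direct use
print(allocate(pool, read_cache_gb=500, host_swap_gb=50))
```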

vSphere Flash Read Cache (vFRC) is a feature in vSphere 5.5 that utilizes the vSphere Flash Infrastructure layer to provide a host-level caching functionality for virtual machine I/Os using flash storage. The goal of introducing the vFRC feature is to enhance performance of certain I/O workloads that exhibit characteristics suitable for caching.

In this paper, we first present an overview of the vFRC architecture, detailing the workflow in the read and write I/O paths. We then present detailed test results for several workloads that perform better with vFRC. We conclude with performance best-practice guidelines for using vSphere Flash Read Cache.
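The caching idea behind vFRC can be illustrated with a simple block cache sitting in front of slower storage. The Python sketch below is not vFRC's implementation: the class name, the least-recently-used (LRU) eviction policy, and the write-through handling (writes always reach the backing store and refresh any cached copy) are assumptions chosen for illustration. Its usage lines show why a workload with a small, frequently re-read working set benefits from caching while a one-pass scan does not.

```python
from collections import OrderedDict


class WriteThroughReadCache:
    """Block read cache in front of a backing store (a dict here).

    Assumptions for illustration: LRU eviction, and write-through writes
    that update the backing store first and then any cached copy.
    """

    def __init__(self, backing, capacity_blocks):
        if capacity_blocks <= 0:
            raise ValueError("capacity_blocks must be positive")
        self.backing = backing
        self.capacity = capacity_blocks
        self.cache = OrderedDict()  # block id -> data, oldest first
        self.hits = 0
        self.misses = 0

    def read(self, block):
        if block in self.cache:
            self.hits += 1
            self.cache.move_to_end(block)  # mark as most recently used
            return self.cache[block]
        self.misses += 1
        data = self.backing[block]  # miss: fetch from backing store
        self.cache[block] = data    # populate cache on read miss
        if len(self.cache) > self.capacity:
            self.cache.popitem(last=False)  # evict least recently used
        return data

    def write(self, block, data):
        self.backing[block] = data  # write-through: storage always updated
        if block in self.cache:
            self.cache[block] = data  # keep the cached copy consistent
            self.cache.move_to_end(block)

    def hit_ratio(self):
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


backing = {i: f"block-{i}" for i in range(1000)}
hot = WriteThroughReadCache(backing, capacity_blocks=16)
for _ in range(10):
    for b in range(10):  # small working set that fits in the cache
        hot.read(b)
scan = WriteThroughReadCache(backing, capacity_blocks=16)
for b in range(1000):  # each block read once, never reused
    scan.read(b)
print(hot.hit_ratio(), scan.hit_ratio())  # 0.9 0.0
```

The contrast between the two ratios reflects the point above that vFRC targets workloads whose characteristics suit caching: re-reads of a working set that fits in the cache, rather than streaming access with no reuse.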

Download White Paper: Performance of vSphere Flash Read Cache in VMware vSphere 5.5