You’re about to enter a world where creating a hot clone of a virtual machine is faster than powering it off. My former Capgemini colleagues Ernst Cozijnsen and John van der Sluis recently implemented EMC PowerPath/VE. Here's their story.

It took the guys in storage land a long time to deliver... but finally it's here: a really great, kick-ass plug-in to boost your vSphere 4 storage performance through the roof.

In prior versions of ESX, the Native Multipathing (“NMP”) plug-in was available for balancing the storage load over different Fibre Channel HBAs and storage paths to your storage array(s). Besides not really being “multipathing”, it had another major disadvantage: it could stress your storage array in such a way that the array could crash. (Yes, we know how it works and yes, we succeeded in this.) This crashing didn’t have much to do with ESX but more with how storage arrays handle the different requests coming in from the FA ports and distribute the load across the storage processors inside the box.

If commands for, e.g., LUN-A come in via 2 different FA ports on the array, each with its own storage processor, there needs to be a lot of “inter-communication” between the storage CPUs inside the box. In a normal environment this is no issue, but when you start to stretch the limits it can and will cause major problems. Therefore I have written a script.

This script is run at boot time from rc.local, which makes sure that all the ESX hosts in your environment send their storage I/O for a given LUN via the same path to your storage box. The storage CPU “inter-communication” is therefore kept to a minimum.
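The script itself is not reproduced in this post. Purely as an illustration of the idea, here is a minimal Python sketch that picks one preferred path per LUN in a deterministic way, so every host ends up making the same choice. The rule used here (LUN number modulo the number of paths) is an assumption; it happens to match the listing that follows, but the original script may well work differently. The function name `preferred_path` and the `PATHS` list are hypothetical.

```python
def preferred_path(lun, paths):
    """Pick the same preferred path for a LUN on every host.

    Assumption: the paths are listed in the same order on all hosts and
    the rule is simply "LUN number modulo number of paths".
    """
    if not paths:
        raise ValueError("no paths available")
    return paths[lun % len(paths)]


# HBA:target pairs in the order they appear in the listing (order matters).
PATHS = ["vmhba2:1", "vmhba2:2", "vmhba4:1", "vmhba4:2"]

for lun in range(4, 10):
    print(f"LUN {lun}: preferred path {preferred_path(lun, PATHS)}:{lun}")
```

Because the choice depends only on the LUN number and the path order, no coordination between hosts is needed; each host can work it out on its own at boot.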

Disk vmhba2:1:4 /dev/sdh (512000MB) has 4 paths and policy of Fixed

FC 16:0.1 50060b0000646c8a<->50060e8004f2e812 vmhba2:1:4 On active preferred

FC 16:0.1 50060b0000646c8a<->50060e8004f2e873 vmhba2:2:4 On

FC 19:0.1 50060b0000646062<->50060e8004f2e802 vmhba4:1:4 On

FC 19:0.1 50060b0000646062<->50060e8004f2e863 vmhba4:2:4 On

Disk vmhba2:1:5 /dev/sdi (512000MB) has 4 paths and policy of Fixed

FC 16:0.1 50060b0000646c8a<->50060e8004f2e812 vmhba2:1:5 On

FC 16:0.1 50060b0000646c8a<->50060e8004f2e873 vmhba2:2:5 On active preferred

FC 19:0.1 50060b0000646062<->50060e8004f2e802 vmhba4:1:5 On

FC 19:0.1 50060b0000646062<->50060e8004f2e863 vmhba4:2:5 On

Disk vmhba2:1:6 /dev/sdj (307200MB) has 4 paths and policy of Fixed

FC 16:0.1 50060b0000646c8a<->50060e8004f2e812 vmhba2:1:6 On

FC 16:0.1 50060b0000646c8a<->50060e8004f2e873 vmhba2:2:6 On

FC 19:0.1 50060b0000646062<->50060e8004f2e802 vmhba4:1:6 On active preferred

FC 19:0.1 50060b0000646062<->50060e8004f2e863 vmhba4:2:6 On

Disk vmhba2:1:7 /dev/sdk (307200MB) has 4 paths and policy of Fixed

FC 16:0.1 50060b0000646c8a<->50060e8004f2e812 vmhba2:1:7 On

FC 16:0.1 50060b0000646c8a<->50060e8004f2e873 vmhba2:2:7 On

FC 19:0.1 50060b0000646062<->50060e8004f2e802 vmhba4:1:7 On

FC 19:0.1 50060b0000646062<->50060e8004f2e863 vmhba4:2:7 On active preferred

Disk vmhba2:1:8 /dev/sdl (512000MB) has 4 paths and policy of Fixed

FC 16:0.1 50060b0000646c8a<->50060e8004f2e812 vmhba2:1:8 On active preferred

FC 16:0.1 50060b0000646c8a<->50060e8004f2e873 vmhba2:2:8 On

FC 19:0.1 50060b0000646062<->50060e8004f2e802 vmhba4:1:8 On

FC 19:0.1 50060b0000646062<->50060e8004f2e863 vmhba4:2:8 On

Disk vmhba2:1:9 /dev/sdm (512000MB) has 4 paths and policy of Fixed

FC 16:0.1 50060b0000646c8a<->50060e8004f2e812 vmhba2:1:9 On

FC 16:0.1 50060b0000646c8a<->50060e8004f2e873 vmhba2:2:9 On active preferred

FC 19:0.1 50060b0000646062<->50060e8004f2e802 vmhba4:1:9 On

FC 19:0.1 50060b0000646062<->50060e8004f2e863 vmhba4:2:9 On

The output above is what the command “esxcfg-mpath -l” displays on ESX 3.x.

With only 1 out of 4 paths per LUN actually carrying I/O, you can imagine that a lot of resources are wasted doing... well... nothing really ;-)
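To make the "1 out of 4" point concrete, here is a small Python sketch that reads output in the format shown above and counts how many paths per disk are marked active. It assumes the lines look exactly like the ESX 3.x listing in this post (only paths in the "On" state are counted); the function name `summarize` and the regular expressions are illustrative, not part of any VMware tool.

```python
import re

# First disk copied from the listing above, plus the header and the
# active path of the second disk.
SAMPLE = """\
Disk vmhba2:1:4 /dev/sdh (512000MB) has 4 paths and policy of Fixed
FC 16:0.1 50060b0000646c8a<->50060e8004f2e812 vmhba2:1:4 On active preferred
FC 16:0.1 50060b0000646c8a<->50060e8004f2e873 vmhba2:2:4 On
FC 19:0.1 50060b0000646062<->50060e8004f2e802 vmhba4:1:4 On
FC 19:0.1 50060b0000646062<->50060e8004f2e863 vmhba4:2:4 On
Disk vmhba2:1:5 /dev/sdi (512000MB) has 4 paths and policy of Fixed
FC 16:0.1 50060b0000646c8a<->50060e8004f2e873 vmhba2:2:5 On active preferred
"""

DISK_RE = re.compile(r"^Disk (\S+) ")
PATH_RE = re.compile(r"^FC \S+ \S+ (\S+) On(.*)$")


def summarize(text):
    """Return {disk: (number_of_paths_seen, [names of active paths])}."""
    result = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        disk = DISK_RE.match(line)
        if disk:
            current = disk.group(1)
            result[current] = (0, [])
            continue
        path = PATH_RE.match(line)
        if path and current is not None:
            count, active = result[current]
            if "active" in path.group(2).split():
                active = active + [path.group(1)]
            result[current] = (count + 1, active)
    return result


for disk, (count, active) in summarize(SAMPLE).items():
    print(f"{disk}: {len(active)} of {count} listed paths active: {', '.join(active)}")
```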

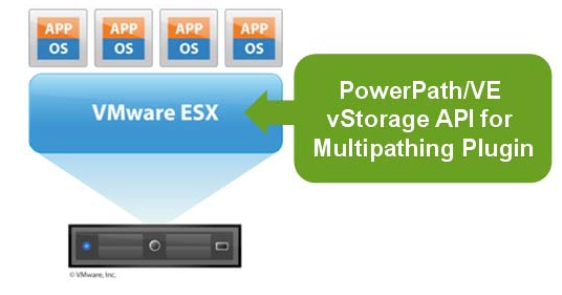

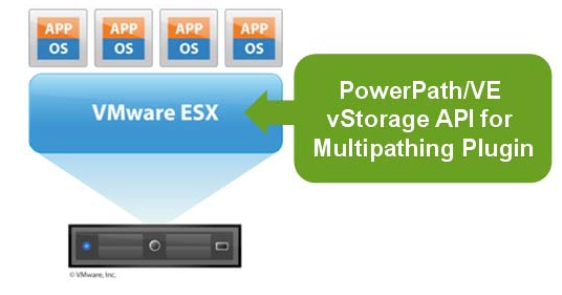

The story changed when VMware announced the vSphere 4.0 PSA (Pluggable Storage Architecture). This enabled storage manufacturers to start developing storage-related plug-ins for vSphere.

EMC was one of the first to modify its existing “PowerPath” software to fit into ESX, calling it “EMC PowerPath/VE for VMware”. This plug-in enables you to really spread your storage load over all your HBAs and storage paths. Finally, a real “multipath” plug-in with full load-balancing capabilities.

Being all hyped up about this nice thing, there was only one way to find out if it did the trick: put it to the test! PowerPath/VE comes with a huge set of whitepapers and best practices. Too many pages and too little time, so this is “The Nutshell version”. The topology ->

Because vSphere is the last ESX version with a Service Console, installing this plug-in requires the “VMware Remote CLI” for remote pushing. As a test, we installed this on the “Virtual Center” server itself.

For managing the plug-in after installation, the EMC RTOOLS CLI also needs to be installed. It can be found together with the license server at http://powerlink.emc.com

Did I hear you say... license server??? Yes, isn’t it great? VMware finally stops using the ELM-tools license manager because of its product friendliness, and EMC re-imports it... Thanks, EMC.

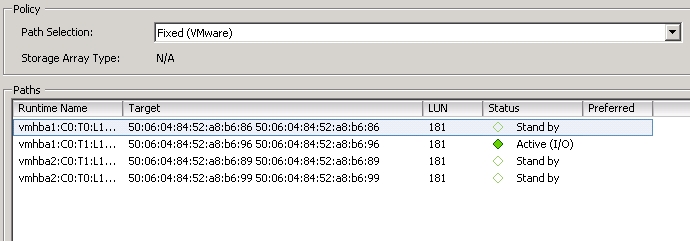

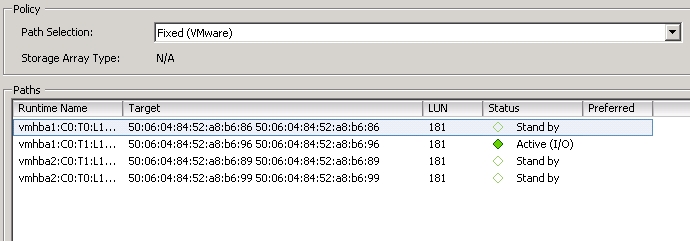

Anyway, after installing all the nice tools on the VC server and pushing the plug-in into ESX, finally some progress. This is how your path policy looks before installing the plug-in:

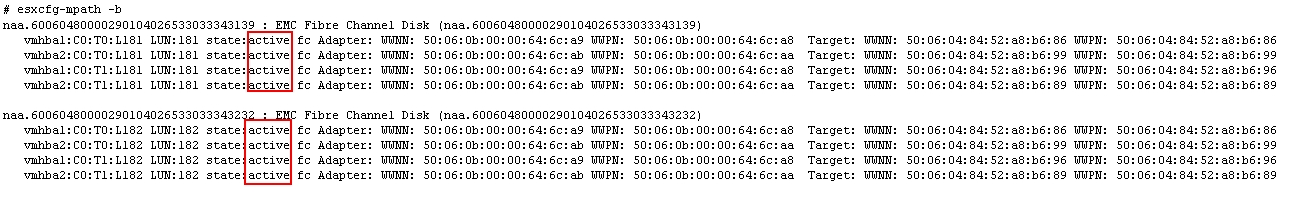

After installing the plug-in it looks like this:

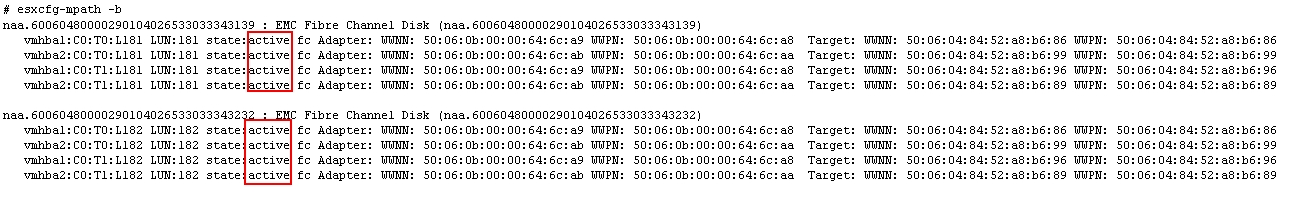

All paths are enabled and ready to have loads of I/O hurled at them. To make sure the GUI says the same as the Service Console:

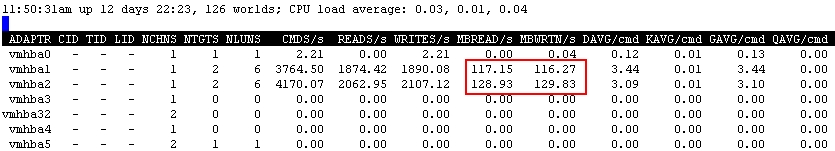

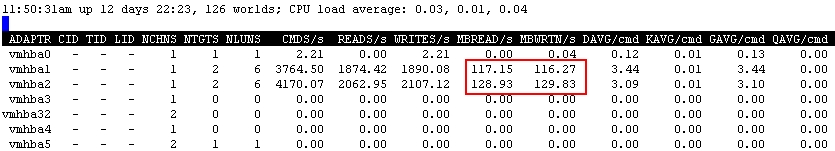

After doing some intensive speed testing we got the following result without any additional configuration changes.

A massive, whopping 120MB/sec per HBA, creating a total of 250MB/s read and 250MB/s write simultaneously. Doing some quick math, 500MB/sec throughput means:

In one minute, this ->  ...is going in here ->
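For those who want the quick math spelled out, here is a tiny Python sketch using only the read and write figures quoted above. The conversion to GB assumes 1 GB = 1000 MB.

```python
read_mb_s = 250   # MB/s read, from the test result above
write_mb_s = 250  # MB/s write, at the same time

total_mb_s = read_mb_s + write_mb_s
per_minute_mb = total_mb_s * 60

# Assumption: decimal units, 1 GB = 1000 MB.
print(f"{total_mb_s} MB/s for 60 s = {per_minute_mb} MB (about {per_minute_mb / 1000:.0f} GB)")
```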

Like Montell Jordan says: http://www.youtube.com/watch?v=qZwcNu1xg_A

Kudos to Ernst Cozijnsen and John van der Sluis.

More info at EMC:

http://www.emc.com/collateral/software/white-papers/h6533-performance-optimization-vmware-powerpath-ve-wp.pdf