One of the coolest features of NSX-T 3.0 is the ability to run NSX-T on a vSphere Distributed Switch (VDS) instead of an N-VDS. Especially when the number of network adapters is limited, this lets you create segments managed by NSX-T alongside distributed port groups and VMkernel port groups on the same switch.

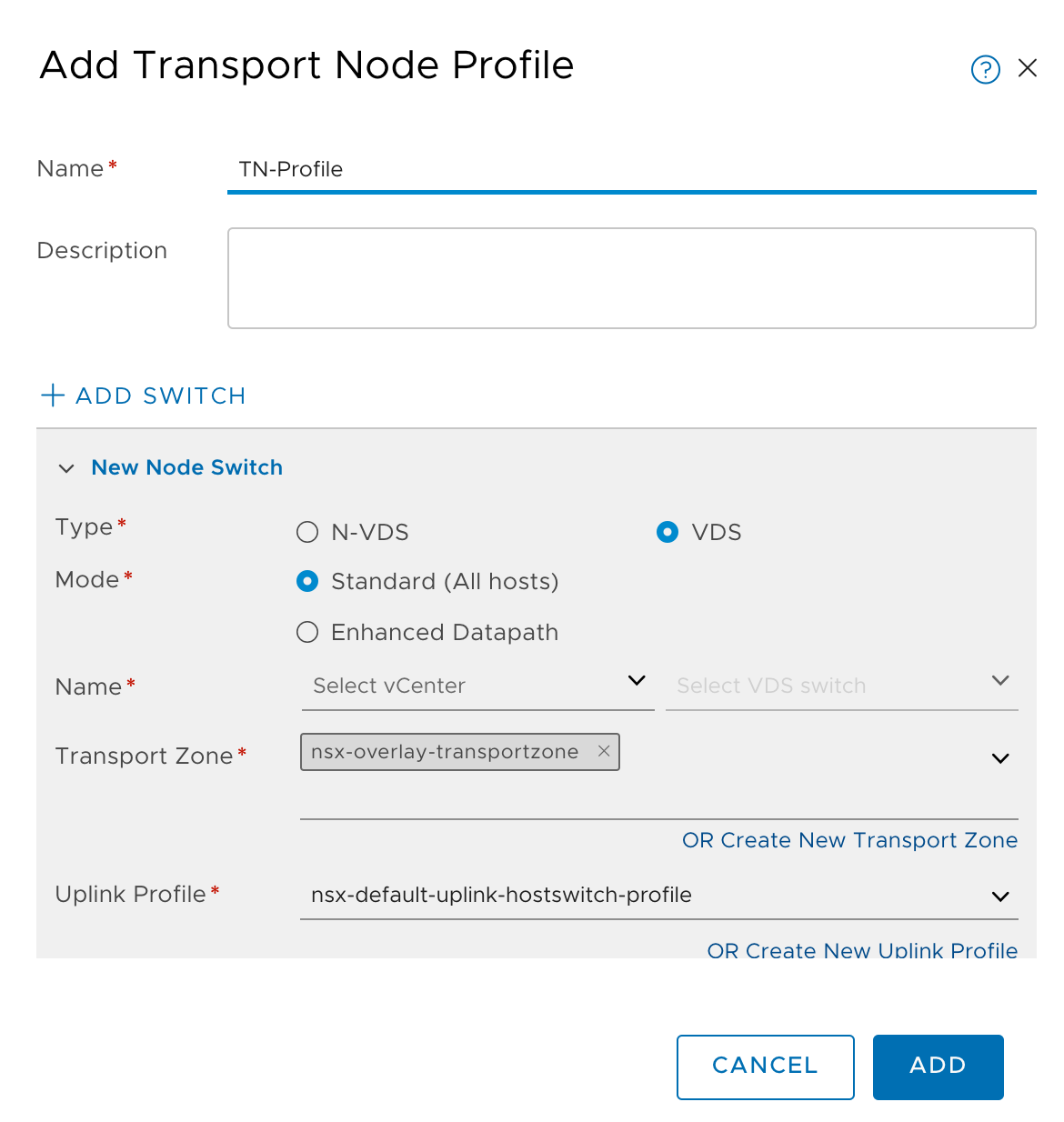

Your ESXi cluster can be prepared for NSX-T by assigning a transport node profile to the hosts. This transport node profile contains an uplink profile, which specifies which physical NICs are used on the ESXi host.

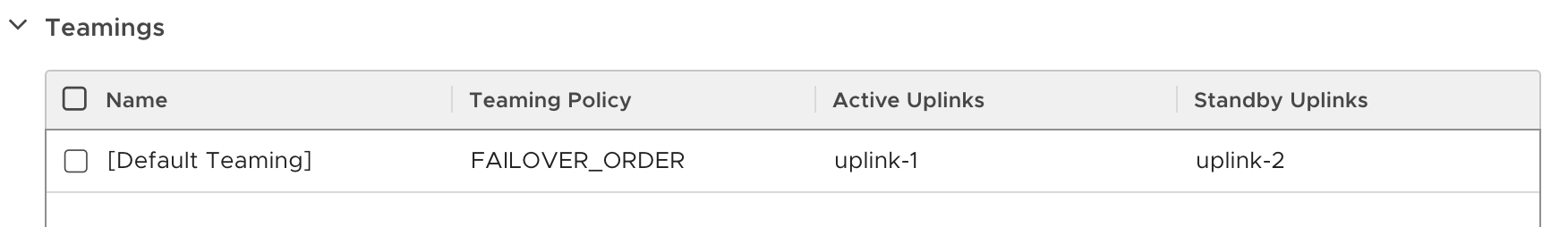

This is a default failover uplink profile with one uplink on standby and one active uplink.
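As a rough illustration of what such a failover profile looks like as data, here is a minimal sketch that builds a request body shaped like an NSX-T `UplinkHostSwitchProfile`. The field names follow the NSX-T Manager API as I understand it, so check them against the API reference for your version. The profile name and the uplink names (`uplink-1`, `uplink-2`) are hypothetical, and the helper function is not part of any VMware SDK.

```python
import json


def build_failover_uplink_profile(name, active_uplink, standby_uplink, transport_vlan=0):
    """Build a dict shaped like an NSX-T UplinkHostSwitchProfile body.

    Assumption: field names mirror the NSX-T Manager API; verify them
    against the API reference for your NSX-T version before use.
    """
    return {
        "resource_type": "UplinkHostSwitchProfile",
        "display_name": name,
        "teaming": {
            # Failover order: use the active uplink, fall back to standby.
            "policy": "FAILOVER_ORDER",
            "active_list": [
                {"uplink_name": active_uplink, "uplink_type": "PNIC"}
            ],
            "standby_list": [
                {"uplink_name": standby_uplink, "uplink_type": "PNIC"}
            ],
        },
        # VLAN used for the overlay (TEP) traffic; 0 means untagged.
        "transport_vlan": transport_vlan,
    }


# Hypothetical names, chosen only for this example.
profile = build_failover_uplink_profile(
    "example-failover-profile", "uplink-1", "uplink-2"
)
print(json.dumps(profile, indent=2))
```

The sketch only prints the payload. Sending it to NSX Manager is deliberately left out, because the endpoint and authentication depend on your environment.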

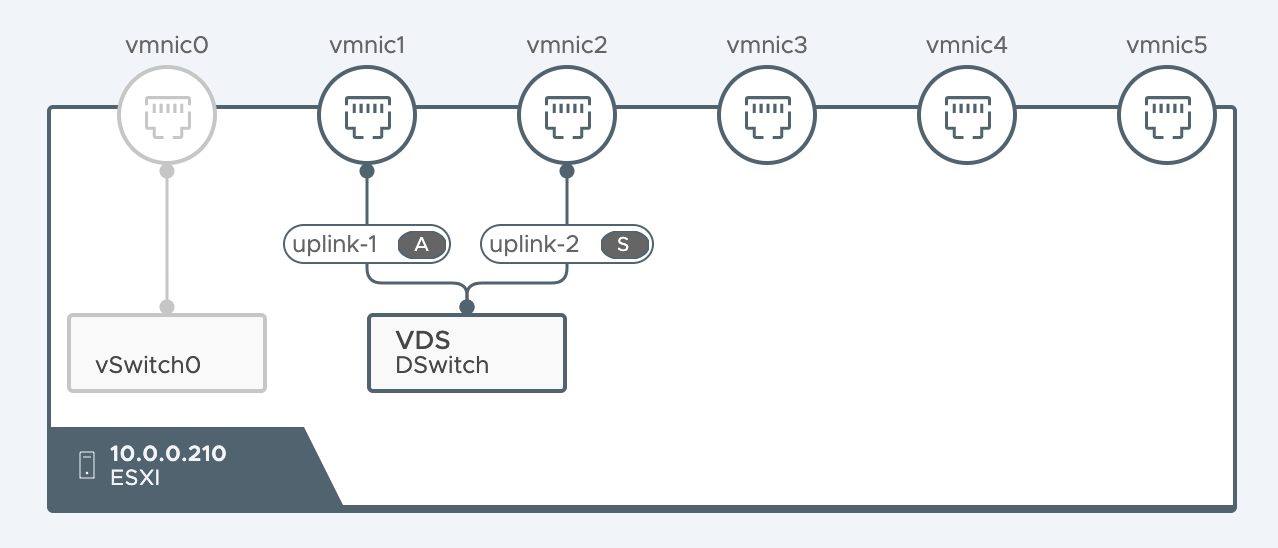

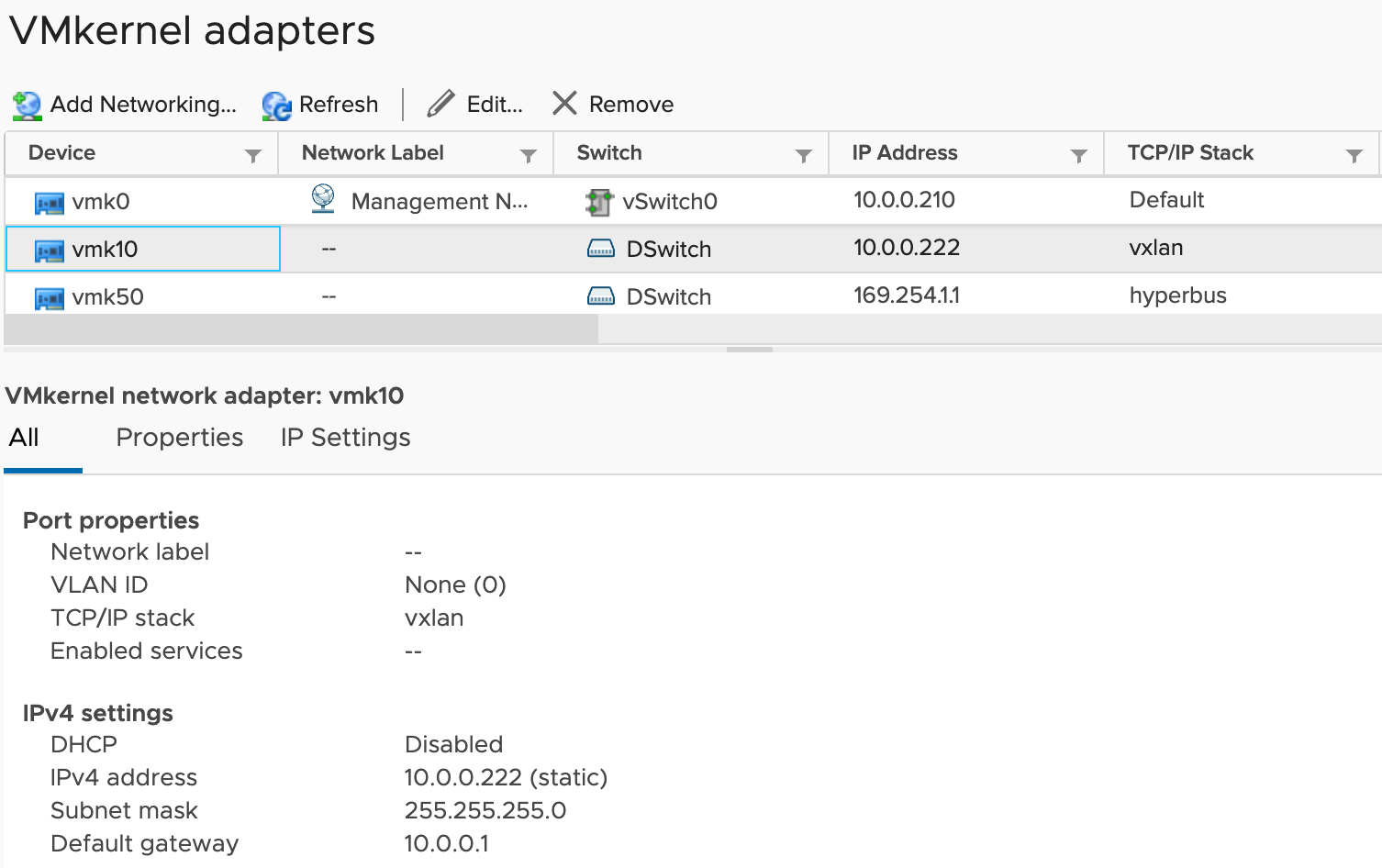

In the switch visualization tab, you can clearly see that the VDS named DSwitch is mapped to vmnic1 and vmnic2. This configuration is owned by vCenter. The VMkernel port that's used as the Geneve tunnel endpoint (VMK10) is created on this VDS. However, the failover policy for VMK10 is managed by NSX-T.

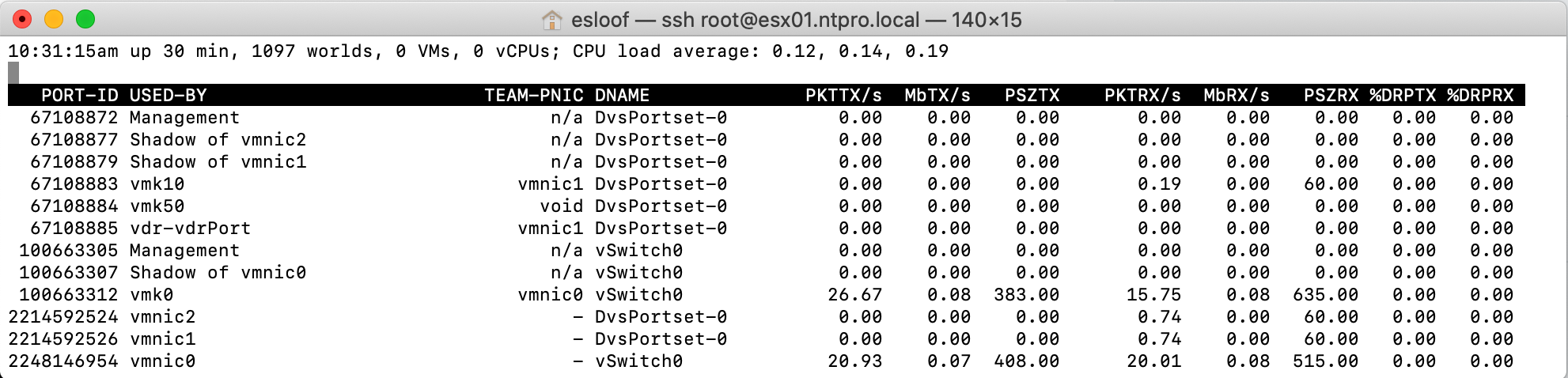

We can see that the VMkernel port is listed on the ESXi host and configured with an IP address that comes from an IP pool managed by NSX-T. The switch that's used by VMK10 is a VDS managed by vCenter.

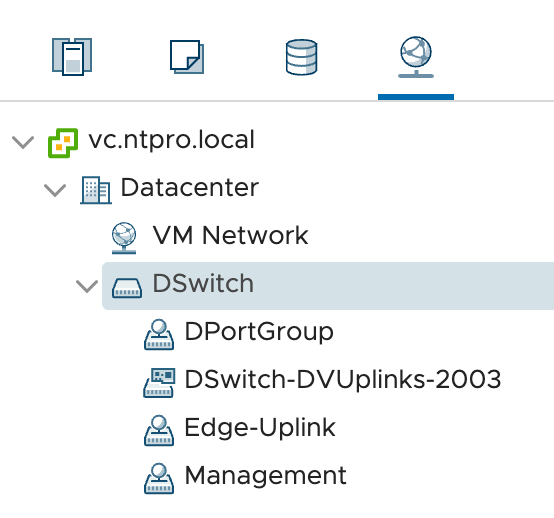

This is the Networking view in the vSphere Client. Notice that there's no port group listed for managing the policies of VMK10, because those policies are managed by NSX-T.

In esxtop we're able to validate the NIC mapping of the Geneve tunnel endpoint (VMK10). It's connected to vmnic1. When vmnic1 fails, VMK10 fails over to vmnic2.
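The failover behaviour described here can be modelled in a few lines. This is a simplified sketch of failover-order uplink selection, not how ESXi or NSX-T implement it internally. The `select_uplink` helper and the link-state dictionary are hypothetical and exist only for illustration.

```python
def select_uplink(active, standby, link_up):
    """Return the first uplink whose link is up, preferring active over standby.

    Simplified model of a failover-order teaming policy: walk the active
    list first, then the standby list, and pick the first healthy uplink.
    Returns None when every uplink is down.
    """
    for uplink in list(active) + list(standby):
        if link_up.get(uplink, False):
            return uplink
    return None


active = ["vmnic1"]
standby = ["vmnic2"]

# Both links healthy: the active uplink carries the TEP traffic.
healthy = select_uplink(active, standby, {"vmnic1": True, "vmnic2": True})
print(healthy)

# vmnic1 down: traffic moves to the standby uplink.
failed_over = select_uplink(active, standby, {"vmnic1": False, "vmnic2": True})
print(failed_over)
```

With both links up the function picks vmnic1, and with vmnic1 down it picks vmnic2, which matches what esxtop shows for VMK10 before and after a failure.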