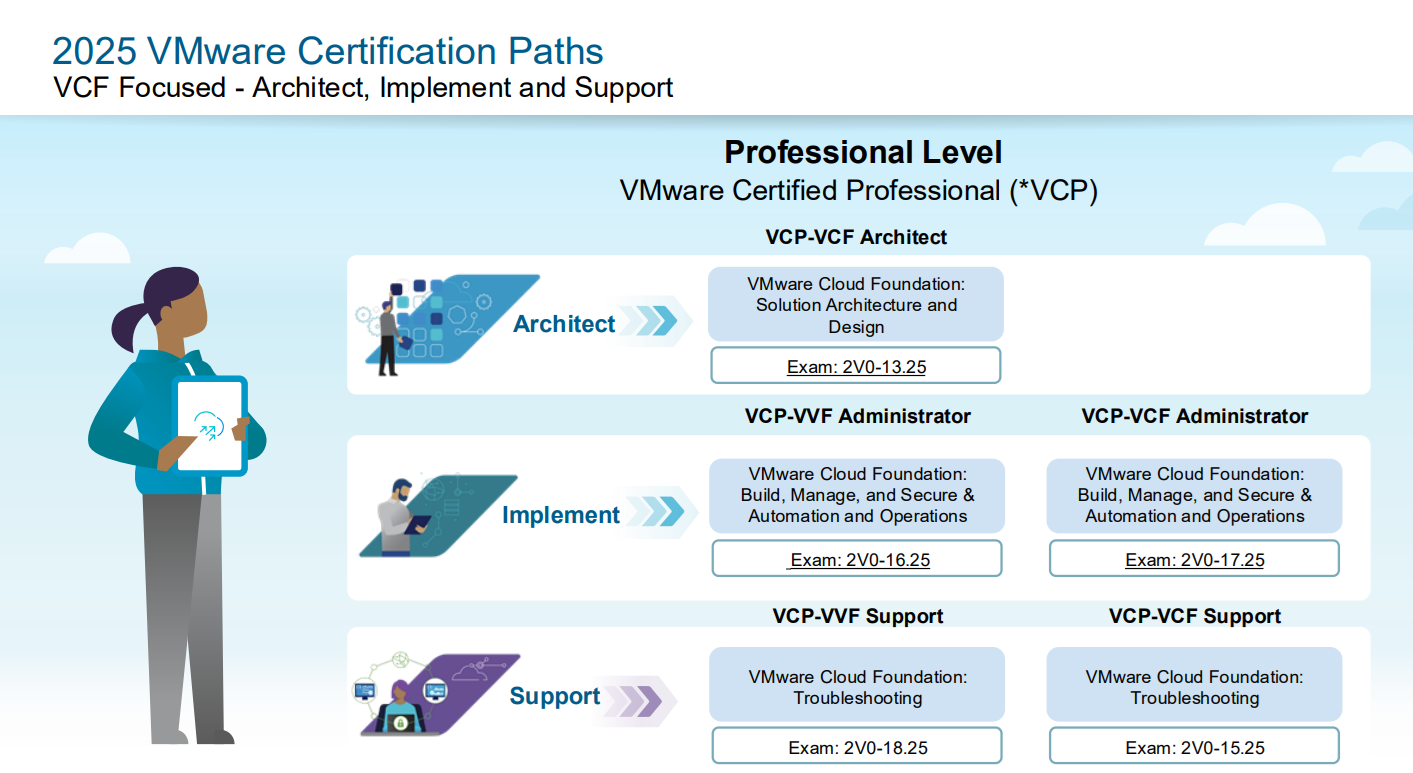

VMware by Broadcom has recently announced the release of five new professional certification exams based on version 9 of its Cloud Foundation platform. These certifications represent a significant evolution in VMware's certification portfolio, reflecting the company's continued innovation in cloud foundation and virtualization technologies under Broadcom's stewardship.

The new certification lineup includes specialized tracks for both VMware Cloud Foundation (VCF) and VMware vSphere Foundation (VVF), covering essential roles from administration and support to architecture. Each certification is designed to validate the skills required for modern cloud infrastructure management, troubleshooting, and design in enterprise environments.

Overview of the New Certification Portfolio

The five new VMware Certified Professional (VCP) certifications are:

- VMware Certified Professional - VMware vSphere Foundation Support (2V0-18.25)

- VMware Certified Professional - VMware Cloud Foundation Administrator (2V0-17.25)

- VMware Certified Professional - VMware Cloud Foundation Support (2V0-15.25)

- VMware Certified Professional - VMware Cloud Foundation Architect (2V0-13.25)

- VMware Certified Professional - VMware vSphere Foundation Administrator (2V0-16.25)

These certifications address the growing demand for skilled professionals who can effectively deploy, manage, support, and architect private cloud environments using VMware's latest technologies. All five exams share the same format: 60 multiple-choice questions, a 135-minute time limit, a passing score of 300, and a fee of $250 per attempt.
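As a rough illustration of what this shared format means for pacing, the sketch below divides the 135-minute limit across the 60 questions (2.25 minutes each) and lists elapsed-time checkpoints. The function names and the 15-question checkpoint interval are assumptions chosen for illustration, not official guidance from Broadcom.

```python
# Minimal pacing sketch for the shared exam format: 60 questions in 135 minutes.
# The checkpoint interval (every 15 questions) is an illustrative assumption.

def minutes_per_question(questions: int, duration_min: int) -> float:
    """Average time budget per question, in minutes."""
    return duration_min / questions


def checkpoints(questions: int, duration_min: int, every: int = 15) -> list[tuple[int, float]]:
    """Return (question_number, target_minutes_elapsed) pairs every `every` questions."""
    per_q = minutes_per_question(questions, duration_min)
    return [(q, q * per_q) for q in range(every, questions + 1, every)]


if __name__ == "__main__":
    print(f"{minutes_per_question(60, 135):.2f} minutes per question")
    for q, t in checkpoints(60, 135):
        print(f"By question {q}: about {t:.1f} minutes elapsed")
```

Evenly spaced checkpoints are only a simple heuristic; candidates who flag difficult questions for review may prefer to reserve time at the end instead.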

Detailed Examination of Each Certification

1. VMware Certified Professional - VMware vSphere Foundation Support (2V0-18.25)

The VMware Certified Professional - VMware vSphere Foundation Support certification represents a crucial entry point for IT professionals seeking to specialize in troubleshooting and supporting VMware vSphere Foundation environments.

This certification validates a candidate's proficiency in standard troubleshooting techniques and methodologies specifically tailored to issues encountered during the deployment and operation of VMware vSphere Foundation (VVF). The exam confirms a comprehensive understanding of VVF's architecture, including its core features, functions, components, and operational models.

Key technical areas covered in this certification include VCF Operations for monitoring, vCenter Server administration, VMware ESX hypervisor management, VMware vSAN storage, and VCF Operations for logs for centralized logging. Candidates who pursue this certification typically work in support roles where they are responsible for maintaining system availability and resolving technical issues that may arise in virtualized environments.

The certification is particularly valuable for IT professionals who are expanding their skill set from traditional infrastructure support roles into cloud-based virtualization support. It provides the foundational knowledge necessary to effectively troubleshoot complex virtualized environments and ensure optimal performance of critical business applications.

For those interested in pursuing this certification, the Exam Study Guide provides comprehensive preparation materials, while exam registration can be completed through Broadcom's certification portal.

2. VMware Certified Professional - VMware Cloud Foundation Administrator (2V0-17.25)

The VMware Certified Professional - VMware Cloud Foundation Administrator certification is designed for IT professionals who are responsible for the day-to-day administration and management of VMware Cloud Foundation environments.

This certification validates the comprehensive skills required to deploy, manage, and support private cloud environments built on VMware Cloud Foundation (VCF). The certification is specifically designed for IT professionals who are transitioning from traditional infrastructure roles to cloud administration responsibilities, reflecting the industry's ongoing shift toward cloud-first architectures.

Candidates for this certification typically include system administrators, cloud administrators, and infrastructure specialists who are responsible for implementing and maintaining VCF infrastructure. The certification ensures that professionals can effectively manage VCF environments to meet organizational service level objectives for availability, performance, and security.

The curriculum covers essential aspects of VCF administration, including initial deployment procedures, ongoing management tasks, performance optimization, security configuration, and troubleshooting common issues. By achieving this certification, professionals demonstrate their ability to effectively operate complex VCF environments and contribute to their organization's cloud transformation initiatives.

The certification is supported by recommended training courses including "VMware Cloud Foundation: Build, Manage, and Secure" and "VMware Cloud Foundation: Automate and Operate," which provide hands-on experience with the platform's administrative capabilities.

Professionals can access the Exam Study Guide for detailed preparation information and complete their exam registration through the official Broadcom portal.

3. VMware Certified Professional - VMware Cloud Foundation Support (2V0-15.25)

The VMware Certified Professional - VMware Cloud Foundation Support certification addresses the critical need for specialized support professionals in VMware Cloud Foundation environments.

This certification validates the skills required to support and troubleshoot private cloud environments built on VMware Cloud Foundation (VCF). It is specifically designed for IT professionals who are expanding from existing support infrastructure roles into cloud administration and Site Reliability Engineer (SRE) positions, reflecting the evolving nature of IT support roles in modern organizations.

The certification targets professionals who are responsible for implementing, maintaining, and supporting VCF infrastructure in ways that contribute to organizational service level objectives for availability, performance, and security. This includes proactive monitoring, incident response, performance tuning, and preventive maintenance activities.

Candidates who achieve this certification demonstrate their ability to effectively troubleshoot complex issues within private cloud environments based on VCF. The certification covers advanced troubleshooting methodologies, diagnostic tools and techniques, performance analysis, and resolution strategies for common and complex issues that may arise in VCF deployments.

The recommended preparation includes the "VMware Cloud Foundation: Troubleshooting" course, which provides hands-on experience with diagnostic tools and troubleshooting scenarios commonly encountered in production environments.

The certification is supported by comprehensive study materials available through the Exam Study Guide, and candidates can complete their exam registration through Broadcom's official certification portal.

4. VMware Certified Professional - VMware Cloud Foundation Architect (2V0-13.25)

The VMware Certified Professional - VMware Cloud Foundation Architect certification is the design-focused track among the five, targeting expertise in VMware Cloud Foundation design and architecture.

This certification validates a candidate's advanced skills in designing comprehensive VCF solutions that address complex organizational requirements. The certification covers critical design areas including manageability, availability, performance, recovery, and security, as well as advanced topics such as monitoring, capacity planning, and migration strategies.

The certification is specifically targeted at infrastructure architects and consultants who are responsible for designing scalable, resilient, and secure cloud foundation solutions. These professionals typically work with organizations to assess requirements, design appropriate solutions, and provide guidance on implementation best practices.

The curriculum encompasses advanced architectural concepts including solution design methodologies, capacity planning and sizing, high availability and disaster recovery design, security architecture, and integration with existing infrastructure. Candidates are expected to balance competing requirements such as performance, cost, security, and operational complexity to deliver optimal solutions.

The certification validates expertise in creating comprehensive design documentation, conducting technical reviews, and providing architectural guidance throughout the implementation lifecycle. Professionals who achieve this certification are equipped to lead complex VCF implementation projects and serve as technical authorities within their organizations.

Preparation for this certification is supported by the "VMware Cloud Foundation: Solution Architecture and Design" course, which provides comprehensive coverage of architectural principles and design methodologies.

Detailed preparation materials are available through the Exam Study Guide, and exam registration can be completed through the official certification portal.

5. VMware Certified Professional - VMware vSphere Foundation Administrator (2V0-16.25)

The VMware Certified Professional - VMware vSphere Foundation Administrator certification provides essential validation for professionals responsible for administering VMware vSphere Foundation environments.

This certification validates the skills required to deploy, manage, and support private cloud environments built on VMware vSphere Foundation (VVF). Similar to its Cloud Foundation counterpart, this certification is designed for IT professionals who are expanding from traditional infrastructure roles to cloud administration, but with a specific focus on vSphere Foundation technologies.

The certification targets professionals who are responsible for implementing and maintaining VVF infrastructure while ensuring it meets organizational service level objectives for availability, performance, and security. This includes tasks such as virtual machine management, resource allocation, performance monitoring, and security configuration.

Candidates who pursue this certification typically work in roles where they are responsible for the day-to-day administration of virtualized environments, including virtual machine lifecycle management, storage and network configuration, and user access management. The certification ensures that professionals have the knowledge and skills necessary to effectively operate VVF environments in production settings.

The curriculum covers essential administrative tasks including initial deployment and configuration, ongoing management and maintenance, performance optimization, security implementation, and troubleshooting common issues. By achieving this certification, professionals demonstrate their competency in managing complex virtualized environments and contributing to their organization's infrastructure reliability and performance.

The certification is supported by the "vSphere Foundation: Build, Manage and Secure" course, which provides practical experience with the platform's administrative capabilities and best practices.

Comprehensive study materials are available through the Exam Study Guide, and candidates can complete their exam registration through the official Broadcom certification portal.

Strategic Importance of These Certifications

The introduction of these five new certifications reflects VMware by Broadcom's commitment to maintaining industry leadership in cloud infrastructure technologies. Each certification addresses specific market needs and career paths, providing professionals with clear progression opportunities within the VMware ecosystem.

The certifications are strategically designed to address the growing complexity of modern IT environments, where organizations require specialized expertise in areas such as cloud administration, support, and architecture. By offering distinct certification tracks for different roles and technologies, VMware enables professionals to develop focused expertise that aligns with their career objectives and organizational needs.

Furthermore, because these certifications are based on version 9, certified professionals are expected to know the platform's latest features, capabilities, and best practices. This is particularly important in the rapidly evolving cloud infrastructure landscape, where staying current with technology developments is essential for professional success.

Conclusion

VMware by Broadcom's release of these five new certification exams represents a significant opportunity for IT professionals to validate their expertise in cutting-edge cloud infrastructure technologies. Each certification provides a distinct pathway for career development, whether in support, administration, or architecture roles.

The comprehensive nature of these certifications, combined with their focus on practical skills and real-world scenarios, helps ensure that certified professionals are well-prepared to contribute to their organizations' cloud infrastructure initiatives. As organizations continue to adopt and expand their use of VMware technologies, demand for certified professionals in these areas is likely to grow.

For IT professionals considering these certifications, the investment in preparation and examination fees represents a valuable opportunity to advance their careers and contribute to their organizations' technological success. The certifications provide not only validation of technical skills but also demonstrate commitment to professional development and staying current with industry best practices.

References and Resources

- VMware Certified Professional - VMware vSphere Foundation Support

- VMware Certified Professional - VMware Cloud Foundation Administrator

- VMware Certified Professional - VMware Cloud Foundation Support

- VMware Certified Professional - VMware Cloud Foundation Architect

- VMware Certified Professional - VMware vSphere Foundation Administrator

With the arrival of VMware Cloud Foundation (VCF) 9, the VMware landscape has changed profoundly. New versions of vSphere 9, vSAN 9, NSX 9, and the full automation and operations stacks will from now on only be made available through the VCF distribution. For many organizations, this means that the traditional management of separate clusters and individual lifecycle management tools must give way to an integrated platform.